On Demand QA Environments

The Problem

Let's just lead with this. The Engineering team at Numerator will double in the next year...

An engineering team generally doesn't go from 20 to 40 or 40 to 80 without some demand from customers. That demand requires more engineers to build out features, fix bugs, scale systems, and provide support. As an engineering team scales, which of the following does it inherently need?

- More Snacks in the Office

- Bevi and LaCroix to get that seltzer fix

- Tools to make developing software faster

Kind of an unfair question, because all three are right. But I don't feel qualified to write a blog post about carbonated water and snacks, so the most right answer at this particular moment is number three. What can sometimes become an afterthought for a successful engineering team is a development environment where developers can work fast and deliver those million-dollar features.

For a fast-growing startup, the development environment at Numerator has been great. Vagrant environments are fired up across multiple teams. With just a few commands, a developer is running a pseudo-production environment locally. This covers almost all local testing, but what happens when you want to test on the real thing, or at least something a little closer to the real thing?

There are QA environments for that. Prior to this change there were three of them (for a team of eight or so engineers). Each is simply a few EC2 instances that comprise most of what's in a production environment. To get another one, a developer would likely have to ask a DevOps person to bring up another stack. If the DevOps person is busy, this could cost the developer time and possibly delay a feature launch. Managing these environments across multiple database migrations and multiple branches caused a number of headaches as well. These issues, plus a few more, led us to develop a system that could support on-demand creation of QA environments.

What we wanted (needed) to accomplish

- New environments in minutes not days

- Environments expire, no need to waste $$$ on stale environments

- Notifications and user-feedback on stack creation

- Environments can be created by anyone with proper Jenkins credentials

- Non-engineers can view/test what developers are working on

With that in mind, we got to work. Most of the pieces were already in place; it was just a matter of putting them all together. We could combine technology we were already using into a robust system that could improve everyone's workflow. Let's take a look at the implementation details.

The Infrastructure

The infrastructure/architecture of the project is composed of Terraform, Ansible, and a few AWS services to store and keep track of state.

- Terraform
  - Module composed of:
    - EC2 Instances
    - Route 53 Records
    - ELB

- Ansible
  - Dynamic Inventories (more information below)
  - Playbook for setup on the instance
  - Playbook for deploy on the instance

- AWS Lambda
  - QA Status Slack Command
  - Destroy stacks that are expired

- DynamoDB
  - Store information about stacks

Making it user friendly

- Jenkins Pipelines
  - Create a new stack
  - Deploy an existing stack
  - Destroy a stack

See It

Create a new Stack

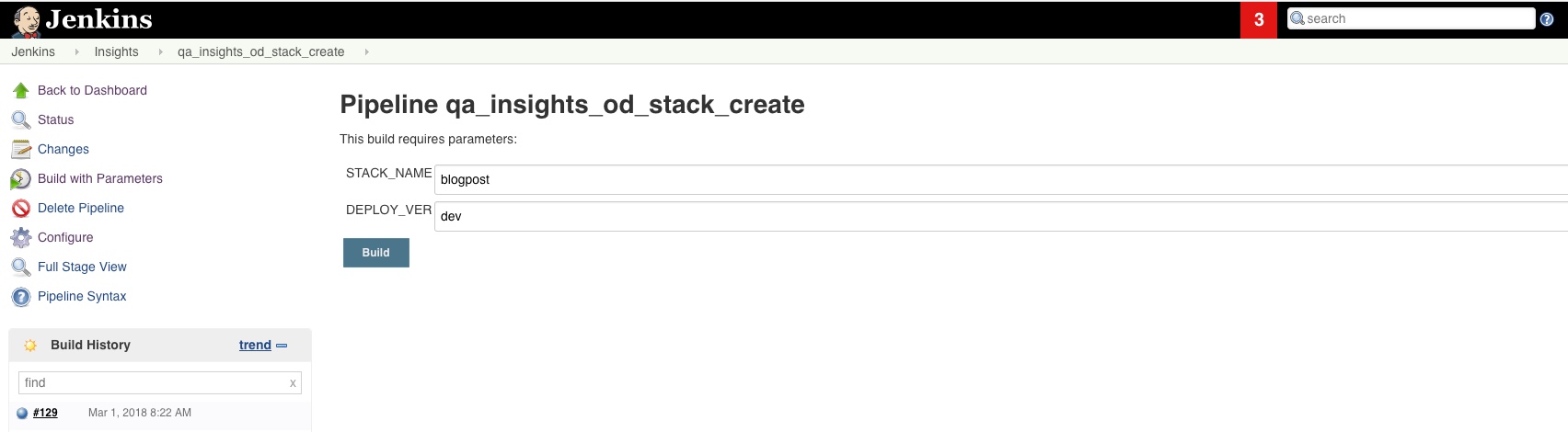

A user can create a stack with a couple of text entries and a button click. A screenshot from the Jenkins UI.

Wait for it

Brand new stack will be up in ~15 minutes.

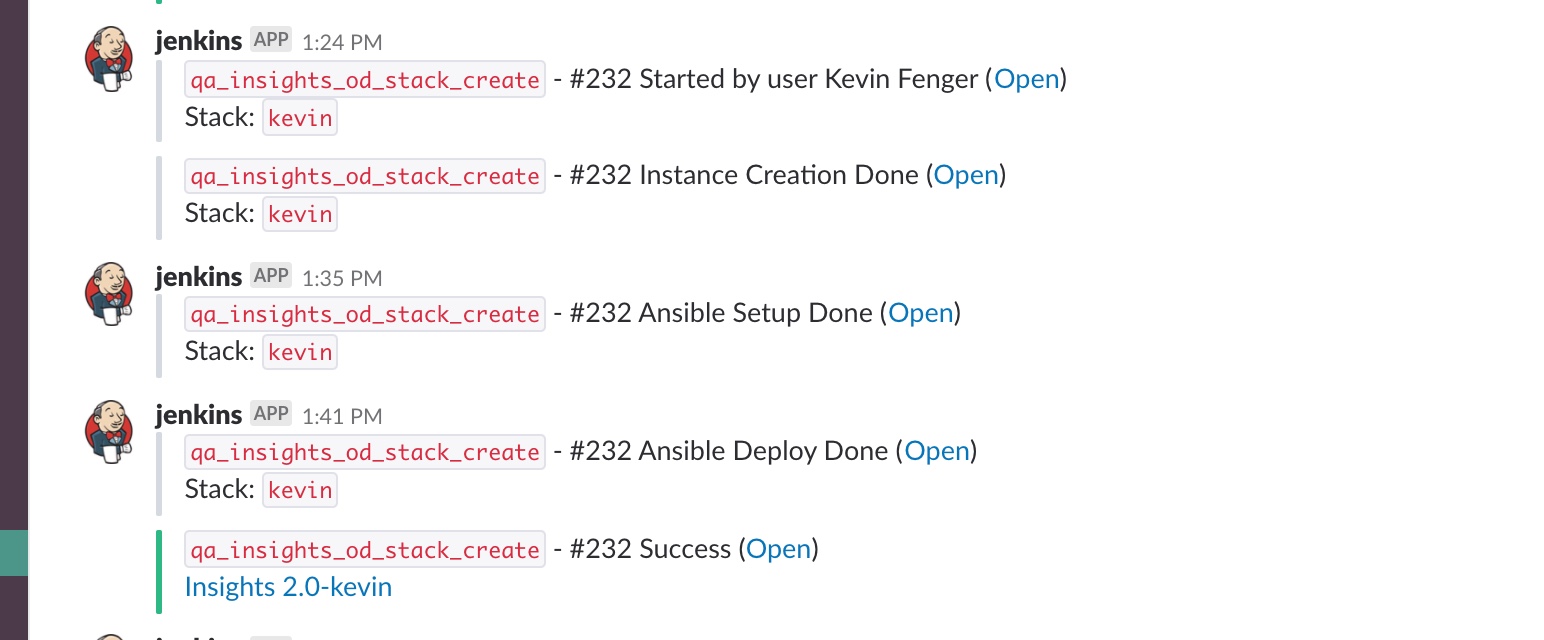

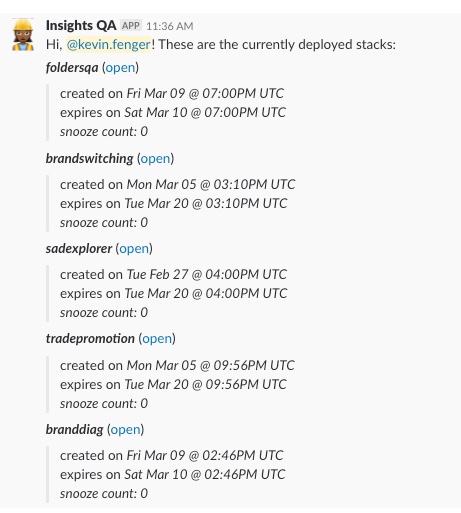

Track Status With Slack

Or Jenkins

Deploy to the new stack

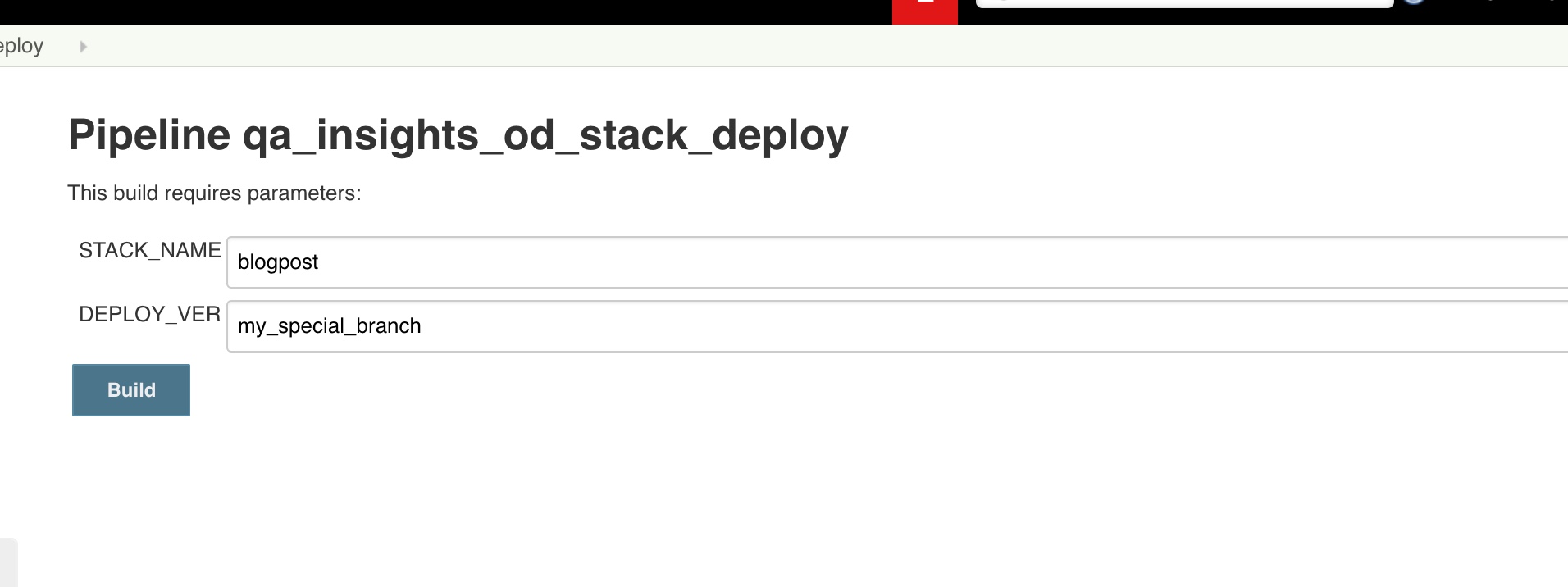

Made a modification and need to see the new code? Once the stack is up, a developer can deploy to it at will via, you guessed it, another Jenkins pipeline.

Track Instances Created

At any point you can use a handy Slack command to view all of the stacks that currently exist.
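
As a rough illustration of what such a status command could return, here is a minimal Python sketch that turns a list of stack records into a Slack slash-command response. The record keys (`name`, `owner`, `expires_at`) and the function name are assumptions made for this example, not the fields we actually store; the `response_type` and `text` keys are the standard slash-command response fields.

```python
from datetime import datetime, timedelta, timezone


def format_stack_status(stacks, now=None):
    """Build a Slack slash-command response listing the current stacks.

    `stacks` is a list of dicts with hypothetical keys "name", "owner",
    and "expires_at" (a timezone-aware datetime).
    """
    now = now or datetime.now(timezone.utc)
    if not stacks:
        return {"response_type": "ephemeral", "text": "No QA stacks are running."}

    lines = []
    # Show the stacks that expire soonest first.
    for stack in sorted(stacks, key=lambda s: s["expires_at"]):
        # Clamp at zero so an overdue stack never shows a negative time.
        remaining = max(stack["expires_at"] - now, timedelta(0))
        hours_left = remaining.total_seconds() / 3600
        lines.append(
            f"`{stack['name']}` (owner: {stack['owner']}) expires in {hours_left:.1f}h"
        )
    return {"response_type": "ephemeral", "text": "\n".join(lines)}
```

In a Lambda handler, the returned dict would be serialized to JSON and sent back as the body of the response to Slack.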

Destroying the Stacks

The stacks have a default lifespan of 24 hours. A scheduled Lambda task runs every fifteen minutes and checks whether any stacks need to be destroyed. If there are any, it calls terraform destroy on each of them. This portion could use some improvement: we could monitor usage to better know when to destroy a stack, but for now we just destroy based on a timestamp.
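
The timestamp check itself is simple. Below is a minimal Python sketch of it, assuming each stack record carries a `name` and a timezone-aware `created_at`; those key names and the function name are hypothetical, chosen for illustration. The actual destroy call is left out.

```python
from datetime import datetime, timedelta, timezone

# Default lifespan of a stack, as described above.
DEFAULT_LIFESPAN = timedelta(hours=24)


def expired_stacks(stacks, now=None, lifespan=DEFAULT_LIFESPAN):
    """Return the names of stacks whose lifespan has elapsed.

    Each stack is a dict with hypothetical keys "name" and "created_at"
    (a timezone-aware datetime).
    """
    now = now or datetime.now(timezone.utc)
    return [s["name"] for s in stacks if now - s["created_at"] >= lifespan]
```

A scheduled task would call `expired_stacks` on every run and trigger the destroy step for each name it returns.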

Code Examples

Dynamic Inventories

Since Numerator is an AWS shop, we took the Ansible dynamic EC2 inventory script and modified it to fit our needs. Once those modifications were made, we could pass this Python script via the -i parameter to our Ansible setup and deploy scripts.

The output of the script is a JSON-style inventory.

    {
      "qa": {
        "children": [
          "cleaner",
          "web",
          "worker"
        ],
        "vars": {
          "url": "elb.net",
          "s_names": "10.0.0.2 elb.net"
        }
      },
      "cleaner": [
        "10.0.0.3"
      ],
      "web": [
        "10.0.0.2"
      ],
      "worker": [
        "10.0.0.1"
      ],
      "stateful": [
        "10.0.0.4"
      ]
    }

And the Jenkins Pipeline/Groovy code to call that.

    ansiblePlaybook(
        playbook: 'plays/project/setup_qa.yaml',
        inventory: 'inventories/custom_ec2.py',
        credentialsId: 'creds',
        colorized: true
    )
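
For readers who haven't written a dynamic inventory before, here is a stripped-down Python sketch of the contract such a script follows: Ansible runs the executable with `--list` and expects the group JSON on stdout, and with `--host <name>` for per-host variables. This is not our modified EC2 script. The EC2 lookup is omitted, and the hard-coded instance list, the `build_inventory` helper, and its parameters are assumptions made for illustration.

```python
import argparse
import json


def build_inventory(instances, parent, child_roles, group_vars):
    """Group (role, ip) pairs into an Ansible inventory dict.

    `parent` becomes a group whose children are `child_roles`; every role
    seen in `instances` becomes a group holding its IP addresses.
    """
    inventory = {
        parent: {"children": sorted(child_roles), "vars": dict(group_vars)}
    }
    for role, ip in instances:
        inventory.setdefault(role, []).append(ip)
    return inventory


def main(argv=None):
    parser = argparse.ArgumentParser()
    parser.add_argument("--list", action="store_true")
    parser.add_argument("--host")
    args, _ = parser.parse_known_args(argv)

    if args.host:
        # No per-host variables in this sketch.
        print(json.dumps({}))
        return

    # Hypothetical data; a real script would query EC2 for these.
    instances = [
        ("worker", "10.0.0.1"),
        ("web", "10.0.0.2"),
        ("cleaner", "10.0.0.3"),
        ("stateful", "10.0.0.4"),
    ]
    group_vars = {"url": "elb.net", "s_names": "10.0.0.2 elb.net"}
    inventory = build_inventory(
        instances, "qa", ["web", "worker", "cleaner"], group_vars
    )
    print(json.dumps(inventory, indent=2))


if __name__ == "__main__":
    main()
```

Saved as an executable file and passed with -i, a script shaped like this prints the same structure as the sample output above.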

Jenkins Pipeline - Declarative Example

Let's look at a somewhat full example of a Jenkins pipeline. Once the syntax is grasped, it's fairly easy to apply most use-cases to it.

Basic Structure

    pipeline {
        agent {
            label 'my_deploy_agent'
        }
        environment {
            ENV_VAR_1 = 'test'
            TERRAFORM_BRANCH = 'master'
            ANSIBLE_BRANCH = 'master'
        }
        stages {
            stage('Stage1') {
                step(s)...
            }
            stage('Stage2') {
                step(s)...
            }
            stage('StageN') {
                step(s)...
            }
        }
        post {
            success {
                ...
            }
            failure {
                ...
            }
        }
    }

Looking at a Stage

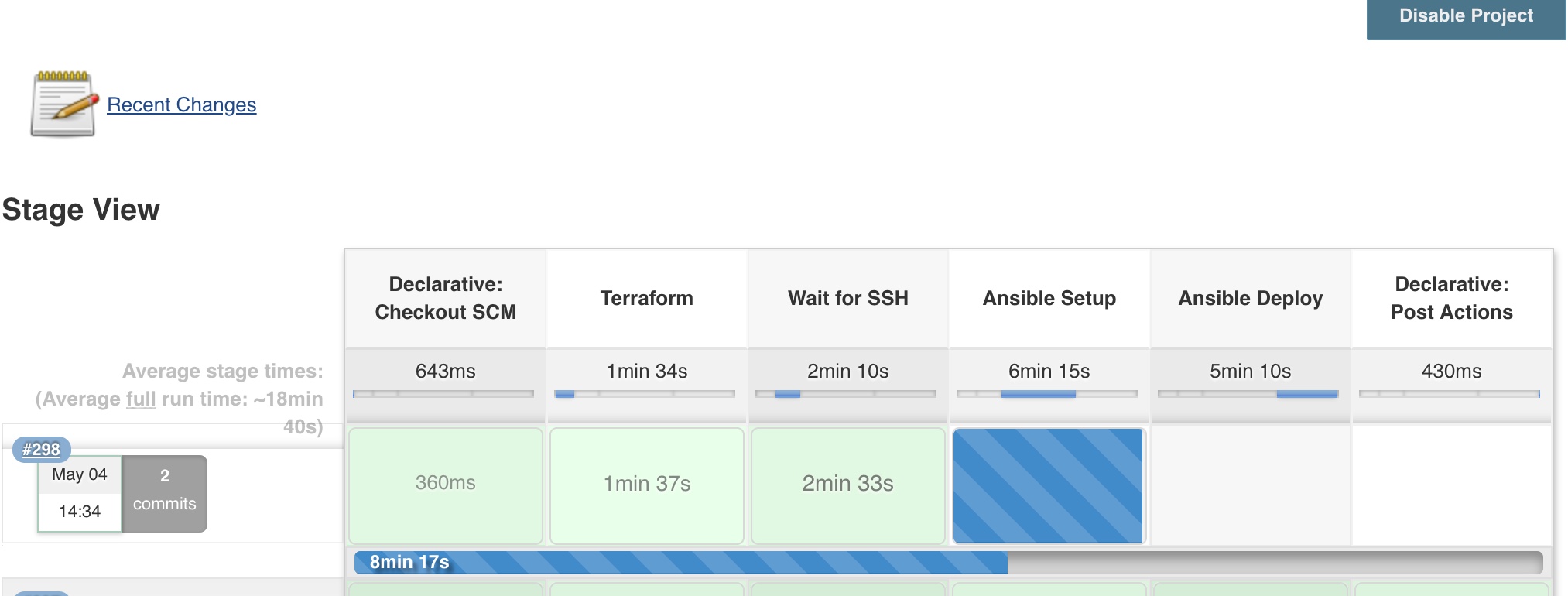

The production deploy of the create stack pipeline has four stages.

- Terraform -> create the instances necessary for a QA environment

- Wait for SSH -> an Ansible playbook that pings the server until it's ready to accept SSH connections from a jenkins user

- Ansible Setup -> playbook that runs setup

- Ansible Deploy -> playbook that runs deploy
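
To make the Wait for SSH idea concrete, here is a minimal Python sketch of the same polling pattern. It is a stand-in, not our playbook: it only checks that the SSH port accepts TCP connections and does not verify that a jenkins user can log in. The function name and its default timeout and interval are assumptions.

```python
import socket
import time


def wait_for_port(host, port=22, timeout=300.0, interval=5.0):
    """Poll until a TCP connection to host:port succeeds.

    Returns True once a connection is accepted, or False if `timeout`
    seconds pass without one.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

The later stages only start once a check like this succeeds, which keeps the setup playbook from failing against an instance that is still booting.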

A focus on the Terraform stage

Each stage has steps, and a stage can have many steps. The steps for the Terraform stage are as follows.

- Send a Slack Message to inform the user that we've started Stack Creation

- Checkout the Terraform Git Repo

- Run the Terraform Command

And here's the code to do just that.

    stage('Terraform') {
        steps {
            // Tell the user that stack creation has started.
            slackSend(
                color: "#D4DADF",
                message: "`${env.JOB_NAME}` - #${env.BUILD_NUMBER} Started by user ${env.USER} (<${env.BUILD_URL}|Open>)\nStack: `${env.STACK_NAME}`",
                channel: "#your-slack-channel")
            // Check out the Terraform repo on the configured branch.
            git(
                credentialsId: 'ABCDEF',
                url: 'git@github.com:git_repo',
                branch: env.TERRAFORM_BRANCH)
            // Expose AWS credentials to Terraform as environment variables.
            withCredentials([[
                $class: 'AmazonWebServicesCredentialsBinding',
                credentialsId: 'jenkins-credentials',
                accessKeyVariable: 'AWS_ACCESS_KEY_ID',
                secretKeyVariable: 'AWS_SECRET_ACCESS_KEY'
            ]]) {
                // Single quotes: ${STACK_NAME} is expanded by the shell, not by Groovy.
                sh(script: 'terraform apply qa-bi-ondemand stackname=${STACK_NAME} verbose=1', returnStdout: true)
            }
        }
    }

Conclusion

We've been using the on-demand QA environments for a couple of months now, and they have drastically improved workflow across our engineering team. They've also brought some quality-of-life improvements for the DevOps engineers. No longer do we have Slack conversations about who is going to use the QA or Staging environments next. Now the conversations have turned to more riveting things like: what constitutes a sandwich?

If you need more examples or help constructing these environments, feel free to reach out.