Failure as a Service

In April 2017, about two months after I joined the Insights Platform team in San Francisco, we noticed an all-too-familiar problem for a startup: we were moving so quickly (at least one release per week) that we weren’t thinking about edge cases and scale. We had 50% test coverage, a one-person QA team (Nick), and an under-provisioned staging environment, which meant that bugs often showed up in production that could have been caught earlier. Not just silly bugs: "hotfix required" bugs. The Sentry channel on Slack looked like our (only) web host was having an existential crisis. We didn’t have a PM to help us prioritize or to prevent scope creep, so we often had to rush features. To make things worse, we were deploying to production manually, running Ansible from a deploy box. This meant that every time we had to hotfix something, someone (me or Edward, our team lead back then) would have to work after 5pm to update production. It was not an easy process.

That same quarter, we came up with several solutions to make our (my) lives easier:

- Keep releasing every week, but create a release branch (git flow) and allow around a week of QA/manual testing

- Set up coverage checks for each PR on codecov.io to make sure that number kept going up and that our most critical code was 100% covered

- Link tickets to PRs; QA agreed to keep a spreadsheet and test the requirements for every commit

- Keep unit-test runtimes to ~5 mins

- Improve deploy times to ~5 mins

- Help our DevOps engineer (Liberty) get us to push-button deploys via Jenkins

- Hire a PM (Nikka)

Around that time, however, I felt like the team needed a slightly more fun way to improve our bug rate: something that wouldn’t slow us down but would (1) make us think a bit more before merging something, and (2) make it a little less annoying for Edward and me to deal with hotfixes. I was reading an old book about engineering culture that described the concept of a bug jar (like a swear jar). I thought, “If I’m gonna be working late, whoever caused the issue is paying for my drinks!”

How it started

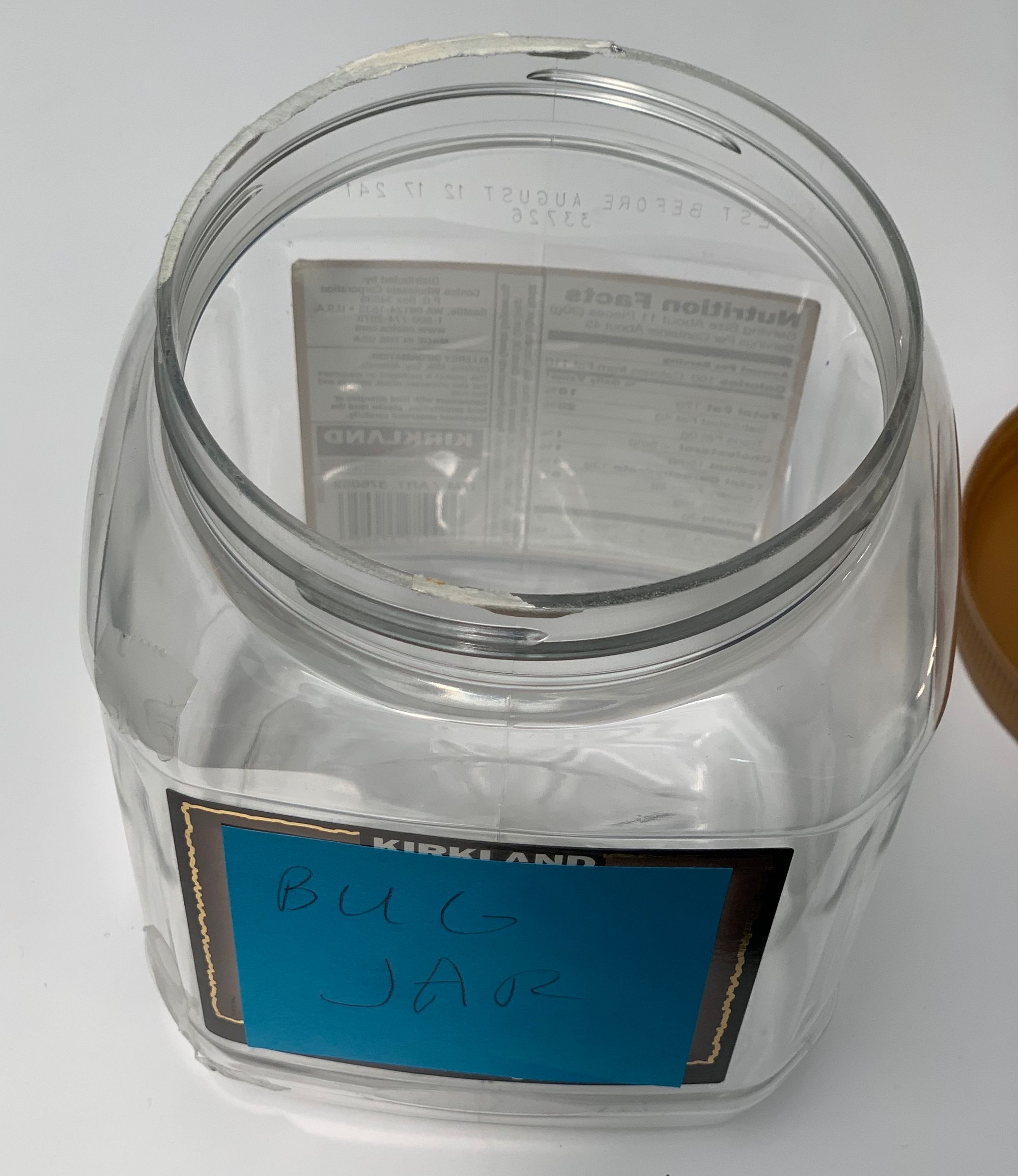

One of our backend engineers (Feldman) was hoarding empty jars of chocolate-covered almonds. I grabbed one of those and taped a “Bug Jar” note to it. The jar was clear, so you could see how much money was in there.

If a particularly annoying bug was found in production, whoever committed it would add $2. If Nick caught a bug in QA, whoever caused it would add $1, decided case by case. You still had to fix the bug. The rule was loosely enforced (no one would be forced to pay), and the proceeds would be used for “drinks sometime.” To get the ball rolling, I put in the first $10 (for a bunch of annoying bugs I had introduced in my short tenure).

Results

First 30 Days (April 2017)

We have around $20 and a fake bug in the jar. Some people are into it, others are trolling, but overall bug rates and severity are going down (probably because of the other initiatives). We release Instant Surveys, which takes quite a few long nights and a week-long offsite in Chicago. Sometimes I fantasize about the bug jar running empty: no more bugs in production.

First year (April 2017 - April 2018)

Money piling up ($100)! We brainstorm additional bug jar ideas. Should we charge the reviewers? (Nah.) Should we use the money for a steak dinner? (Ooh, maybe.) Can we use the money to pay another engineer to upgrade us to Python 3? (In San Francisco? LOL.) An engineer breaks search and pays $5: bigger screw-up, bigger donation (we only asked for $2). We let new hires off the hook for a couple of silly bugs.

Instead of asking people for change, I push change on people (hey, Amber, you want twenty $1 bills?) to make more room in the jar. I realize I’m its biggest contributor.

We pass 1,000 enterprise users on Insights. Not Facebook numbers, and never going to be, but good for a one-year-old business intelligence platform. Part of me wants to charge every internal user who says the platform is slow.

Second Year (April 2018 - April 2019)

Some people leave, some people join; overall, the team grows along with the bug jar (20 people, over $200). Foreign currency shows up (pesos, euros). Few people carry cash, so I get a lot of Venmo payments tagged with bug emojis. People start asking if we’re ever going to spend the money. I say: “One day...”

We make a $40 withdrawal for a beer happy hour at work on a particularly hot and stressful Friday. Two weeks later, I forget about the happy hour and think someone has taken money from the jar, until I check my credit card statement and don’t see a $40 charge for beer. After that, I keep track of the jar’s balance on Slack.

Insights easily hits 2K users and 500K jobs. We get way more data (500 million receipts, 100K panelists) and build more and more complex reports on top of it (Moments of Truth? Event Analysis?? Scorecards???). Naturally, people complain that our platform is slowing down. We rebrand and launch a beta of a UK version in October. In April, we finally run Python 3 only in production (not without many offerings to the bug jar). The engineer in charge of the switch forgets to upgrade our deploy stack first; $2. Fail forward.

Somehow, I’m still the biggest contributor (but I’m managing now?). Old bugs and new bugs. It’s less about dinging bugs and more about feeling like a team. A literal buy-in. Paying the bug jar feels like absolution.

I hope the new hires make fewer mistakes than I did. I hope they never pay the jar as much as I have.

Now (April 2019 - Present)

We have engineers in Chicago, New York, and Ottawa, as well as SF. People wonder if we should start a Chicago bug jar. I keep thinking Venmo should have a feature for this use case. We still haven’t spent the money, and it’s starting to get musty. Maybe one day. It’s a small monument to how far we’ve come.

Over a million jobs on Insights (I’ve stopped counting). We’re launching new Surveys; we’ve launched five new reports this year, plus a ton of refreshes. We have our eye on Vertica 9 and Eon Mode with auto-scaling groups: the next evolution of auto-scaling clusters. One of our engineers finds better projections for our data warehouse, and we have them in production a week later; the next day, reports are 2-3x faster. It takes a few days for anyone to say anything. We think, why didn’t we figure it out earlier? Meh. We’ve learned not to be too hard on ourselves.

Coverage is creeping up, and everyone is writing tests. We’re entrusting our new hires with adding type hints to our code and profiling it, so we can keep improving. More and more of our engineers are branching out beyond their specialties, and we encourage them.

I write a data migration that takes more than 8 hours to run; I optimize it into a distributed set of tasks, and it finishes in minutes. Two for the jar. We’re failing less, but sometimes we’re also failing bigger. It makes us stronger.

Some of the new hires have already paid the toll. I hope they end up paying the jar more than I have. Plenty has changed in two years, but the bug jar remains, proudly tallying our failures and hungry for us to be even bolder.